Queen Mary University of London Leads Global Conversation on AI Policy

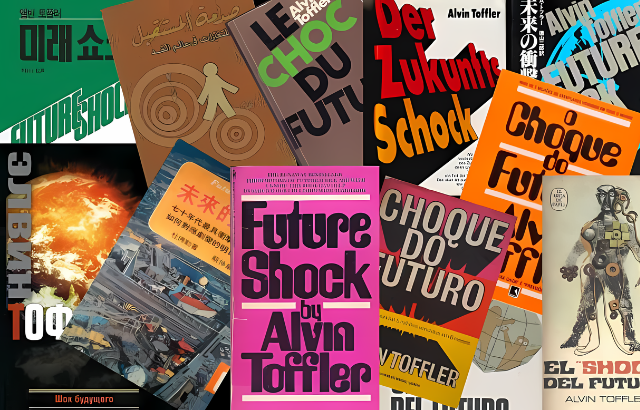

Leading thinkers from around the world convened to identify and tackle the ethical and policy challenges posed by the explosive rise of GenAI. Referencing Alvin Toffler’s influential 1970 book Future Shock, the event asked: “Has the meteoric rise of generative AI (GenAI) triggered ‘future shock’ – a societal struggle to adapt to the breakneck pace of change?”

The event, led by Queen Mary University of London’s Professor David Leslie (Digital Environment Research Institute (DERI) and The Alan Turing Institute), featured a range of thought-provoking insights from leading figures in the field:

- Professor David Leslie highlighted the “disturbing combination of familiarity and otherness” that GenAI presents. He pointed to the potential for “computational Frankensteins,” stressing the importance of broadening regulatory activities and fostering responsible AI practices.

- Professor Xiao-Li Meng (Harvard University) emphasised that policy will play a crucial role in ensuring AI is used for good, as “there’s nothing artificial about AI – it’s created by people and trained on human data.”

- Antonella Maia Perini (Research Associate, The Alan Turing Institute, UK) stressed the importance of the Policy Forum “for different prospects to be connected together on global AI policy and governance.”

- Professor Yoshua Bengio (Université de Montréal; Founder and Scientific Director, Mila, Canada) warned of the potential for AI to act on its own goals: “We urgently need to invest in finding scientific solutions to ensure powerful AI systems are secure and can’t be abused. These systems might become smarter than humans, and we don’t yet know how to create AI that won’t harm us if it prioritises self-preservation.”

- Nicola Solomon (Chair, Creators Rights Alliance, UK) raised concerns about the impact of GenAI on the creative industry, including ethical and financial considerations. She stressed the need for transparency in how creative works are used to train AI systems, and in how their creators are credited and paid.

- Dr Ranjit Singh (Senior Researcher, Data & Society, US) discussed the limitations of “red-teaming” as a strategy for governing large language models (LLMs), highlighting the need for a more comprehensive approach.

- Dr Jean Louis Fendji (Research Director, AfroLeadership, Cameroon) argued that the “unconnected” will be disproportionately affected by GenAI, and called for urgent action to bridge the digital divide.

- Shmyla Khan (Digital Rights Foundation) pushed back against “policy panics” surrounding GenAI, advocating for a more nuanced approach that considers the needs of the Global South.

- Tamara Kneese (Data & Society Research Institute’s Algorithmic Impact Methods Lab) explored the wider societal risks and impacts of generative AI: “The environmental costs of GenAI are significant. We need to reframe the scope of AI research and development to consider its carbon footprint and resource consumption throughout its lifecycle.”

- Rachel Coldicutt OBE (Founder & Executive Director, Careful Industries, UK) delved into the power dynamics surrounding AI narratives, particularly the media’s focus on “existential risks” of AI. She argued for a more nuanced approach to communicating the complexities of AI’s social impacts.

- Smera Jayadeva (Researcher, The Alan Turing Institute, UK) questioned “who actually has their hands on the wheel” when it comes to GenAI innovation, highlighting the importance of democratic control and public interest in shaping the future of AI.

Professor David Leslie and researchers from The Alan Turing Institute’s Ethics and Responsible Innovation team also unveiled a new interactive platform and workbooks. This initiative aims to empower the public sector to develop and implement responsible AI practices.

The event concluded with a call for a global response to GenAI. Answering questions from the audience, panellists stressed the need for international cooperation, investment in AI safety research, and regulations that prioritise the public good. David Leslie highlighted the “problem of dual use” – the potential weaponisation of GenAI – as an area demanding immediate attention.

Queen Mary’s forum served as a springboard for further dialogue and action. As GenAI continues to evolve, a global conversation around responsible development and deployment is more critical than ever.