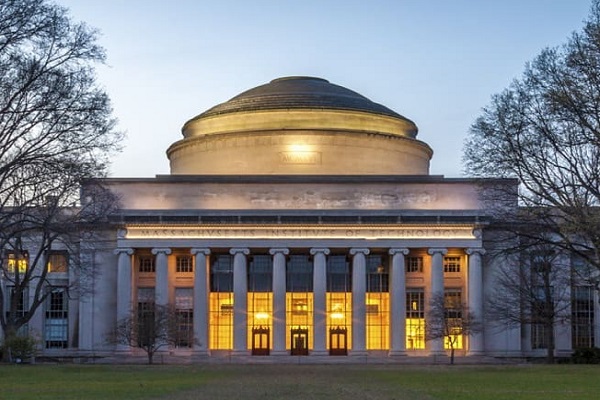

Massachusetts Institute of Technology: Deploying machine learning to improve mental health

A machine-learning expert and a psychology researcher/clinician may seem an unlikely duo. But MIT’s Rosalind Picard and Massachusetts General Hospital’s Paola Pedrelli are united by the belief that artificial intelligence may be able to help make mental health care more accessible to patients.

In her 15 years as a clinician and researcher in psychology, Pedrelli says “it’s been very, very clear that there are a number of barriers for patients with mental health disorders to accessing and receiving adequate care.” Those barriers may include figuring out when and where to seek help, finding a nearby provider who is taking patients, and obtaining financial resources and transportation to attend appointments.

Pedrelli is an assistant professor in psychology at Harvard Medical School and the associate director of the Depression Clinical and Research Program at Massachusetts General Hospital (MGH). For more than five years, she has been collaborating with Picard, an MIT professor of media arts and sciences and a principal investigator at MIT’s Abdul Latif Jameel Clinic for Machine Learning in Health (Jameel Clinic), on a project to develop machine-learning algorithms to help diagnose and monitor symptom changes among patients with major depressive disorder.

Machine learning is a type of AI technology in which a machine, given lots of data and examples of good behavior (i.e., what output to produce when it sees a particular input), can get quite good at autonomously performing a task. It can also help identify meaningful patterns that humans may not have been able to find as quickly without the machine’s help. Using wearable devices and smartphones of study participants, Picard and Pedrelli can gather detailed data on participants’ skin conductance and temperature, heart rate, activity levels, socialization, personal assessment of depression, sleep patterns, and more. Their goal is to develop machine-learning algorithms that can take in this tremendous amount of data and make it meaningful, identifying when an individual may be struggling and what might be helpful to them. They hope that their algorithms will eventually equip physicians and patients with useful information about individual disease trajectory and effective treatment.

“We’re trying to build sophisticated models that have the ability to not only learn what’s common across people, but to learn categories of what’s changing in an individual’s life,” Picard says. “We want to provide those individuals who want it with the opportunity to have access to information that is evidence-based and personalized, and makes a difference for their health.”

Machine learning and mental health

Picard joined the MIT Media Lab in 1991. Three years later, she published a book, “Affective Computing,” which spurred the development of a field with that name. Affective computing is now a robust area of research concerned with developing technologies that can measure, sense, and model data related to people’s emotions.

While early research focused on determining if machine learning could use data to identify a participant’s current emotion, Picard and Pedrelli’s current work at MIT’s Jameel Clinic goes several steps further. They want to know if machine learning can estimate disorder trajectory, identify changes in an individual’s behavior, and provide data that informs personalized medical care.

Picard and Szymon Fedor, a research scientist in Picard’s affective computing lab, began collaborating with Pedrelli in 2016. After running a small pilot study, they are now in the fourth year of their National Institutes of Health-funded, five-year study.

To conduct the study, the researchers recruited MGH participants with major depressive disorder who have recently changed their treatment. So far, 48 participants have enrolled in the study. For 22 hours per day, every day for 12 weeks, participants wear Empatica E4 wristbands. These wearable wristbands, designed by one of the companies Picard founded, can pick up biometric data such as electrodermal (skin) activity. Participants also download apps on their phones that collect data on texts and phone calls, location, and app usage, and that prompt them to complete a biweekly depression survey.

Every week, patients check in with a clinician who evaluates their depressive symptoms.

“We put all of that data we collected from the wearable and smartphone into our machine-learning algorithm, and we try to see how well the machine learning predicts the labels given by the doctors,” Picard says. “Right now, we are quite good at predicting those labels.”
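To make the idea of "predicting the labels given by the doctors" concrete, here is a minimal Python sketch of that kind of evaluation. It is not the team's model: the feature names, the numbers, the choice of a nearest-neighbor predictor, and the leave-one-out scoring are all assumptions made for illustration, and the data are synthetic.

```python
import math
from statistics import mean, pstdev

# Synthetic, made-up weekly records (NOT study data). Hypothetical features:
# (average sleep hours, daily steps in thousands, resting heart rate).
features = [
    (7.5, 9.0, 62.0),
    (7.0, 8.0, 64.0),
    (6.0, 5.0, 70.0),
    (5.5, 4.0, 72.0),
    (8.0, 10.0, 60.0),
    (5.0, 3.0, 75.0),
]
# Synthetic clinician-assigned symptom scores, one per record (higher = more severe).
clinician_scores = [8.0, 10.0, 18.0, 20.0, 6.0, 24.0]


def standardize(rows):
    """Rescale each column to zero mean and unit variance so no feature dominates.

    For simplicity this uses all rows at once; a careful evaluation would
    compute the scaling from the training rows only.
    """
    columns = list(zip(*rows))
    means = [mean(col) for col in columns]
    stds = [pstdev(col) or 1.0 for col in columns]
    return [tuple((v - m) / s for v, m, s in zip(row, means, stds)) for row in rows]


def predict_knn(train_x, train_y, query, k=2):
    """Predict a score as the mean label of the k nearest training records."""
    by_distance = sorted(zip(train_x, train_y), key=lambda pair: math.dist(pair[0], query))
    return mean(label for _, label in by_distance[:k])


def leave_one_out_mae(xs, ys, k=2):
    """Hold out each record in turn, predict it from the rest, and average the error."""
    errors = []
    for i in range(len(xs)):
        train_x = xs[:i] + xs[i + 1:]
        train_y = ys[:i] + ys[i + 1:]
        errors.append(abs(predict_knn(train_x, train_y, xs[i], k) - ys[i]))
    return mean(errors)


mae = leave_one_out_mae(standardize(features), clinician_scores)
print(f"Mean absolute error against clinician scores: {mae:.2f}")
```

The lower the error on held-out records, the better the model's outputs agree with the clinicians' assessments, which is the comparison Picard describes.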

Empowering users

While developing effective machine-learning algorithms is one challenge researchers face, designing a tool that will empower and uplift its users is another. Picard says, “The question we’re really focusing on now is, once you have the machine-learning algorithms, how is that going to help people?”

Picard and her team are thinking critically about how the machine-learning algorithms may present their findings to users: through a new device, a smartphone app, or even a method of notifying a predetermined doctor or family member of how best to support the user.

For example, imagine a technology that records that a person has recently been sleeping less, staying inside their home more, and has a faster-than-usual heart rate. These changes may be so subtle that the individual and their loved ones have not yet noticed them. Machine-learning algorithms may be able to make sense of these data, mapping them onto the individual’s past experiences and the experiences of other users. The technology may then be able to encourage the individual to engage in certain behaviors that have improved their well-being in the past, or to reach out to their physician.
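One simple way to picture this kind of change detection is to compare a person's recent measurements with their own earlier baseline. The Python sketch below does that with a z-score. It is only an illustration under stated assumptions: the signal names, the numbers, and the threshold of 2.0 are hypothetical, and the article does not say the researchers use this method.

```python
from statistics import mean, stdev


def deviation_from_baseline(history, recent):
    """Return how many baseline standard deviations the recent mean has shifted."""
    spread = stdev(history)
    if spread == 0:
        return 0.0
    return (mean(recent) - mean(history)) / spread


def flag_changes(baseline, recent, threshold=2.0):
    """Return the signals whose recent values differ markedly from the baseline."""
    flags = {}
    for signal, history in baseline.items():
        z = deviation_from_baseline(history, recent[signal])
        if abs(z) >= threshold:
            flags[signal] = round(z, 2)
    return flags


# Synthetic, made-up daily values for one hypothetical person (NOT study data).
baseline = {
    "sleep_hours": [7.2, 7.0, 7.4, 6.9, 7.1, 7.3, 7.0],
    "resting_heart_rate": [62, 64, 63, 61, 65, 63, 62],
    "steps_thousands": [8.0, 7.5, 8.2, 7.9, 8.1, 7.8, 8.0],
}
recent = {
    "sleep_hours": [6.0, 5.8, 6.1],
    "resting_heart_rate": [70, 71, 69],
    "steps_thousands": [7.9, 8.0, 7.8],
}

flags = flag_changes(baseline, recent)
for signal, z in flags.items():
    direction = "lower" if z < 0 else "higher"
    print(f"{signal}: {direction} than this person's usual range (z = {z})")
```

With these made-up values, sleep and heart rate are flagged while step count is not. A real system would also need to handle missing data, seasonal patterns, and the differences between people that Picard mentions.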

If implemented incorrectly, it’s possible that this type of technology could have adverse effects. If an app alerts someone that they’re headed toward a deep depression, that could be discouraging information that leads to further negative emotions. Pedrelli and Picard are involving real users in the design process to create a tool that’s helpful, not harmful.

“What could be effective is a tool that could tell an individual ‘The reason you’re feeling down might be the data related to your sleep has changed, and the data related to your social activity, and you haven’t had any time with your friends, your physical activity has been cut down. The recommendation is that you find a way to increase those things,’” Picard says. The team is also prioritizing data privacy and informed consent.

Artificial intelligence and machine-learning algorithms can make connections and identify patterns in large datasets that humans aren’t as good at noticing, Picard says. “I think there’s a real compelling case to be made for technology helping people be smarter about people.”