New App Empowers Drones to Enhance Farm Efficiency, Say Researchers

Researchers at the University of California, Davis, have developed a web application to help farmers and industry workers use drones and other uncrewed aerial vehicles, or UAVs, to generate the best possible data. By helping farmers use resources more efficiently, the tool could help them adapt to a changing climate in a world that needs to feed billions.

Associate Professor Alireza Pourreza, director of the UC Davis Digital Agriculture Lab, and postdoctoral researcher Hamid Jafarbiglu, who recently completed his doctorate in biological systems engineering under Pourreza, designed the When2Fly app to make drone data collection more effective and accurate. Specifically, the platform helps drone users avoid glare-like areas called hotspots that can ruin collected data.

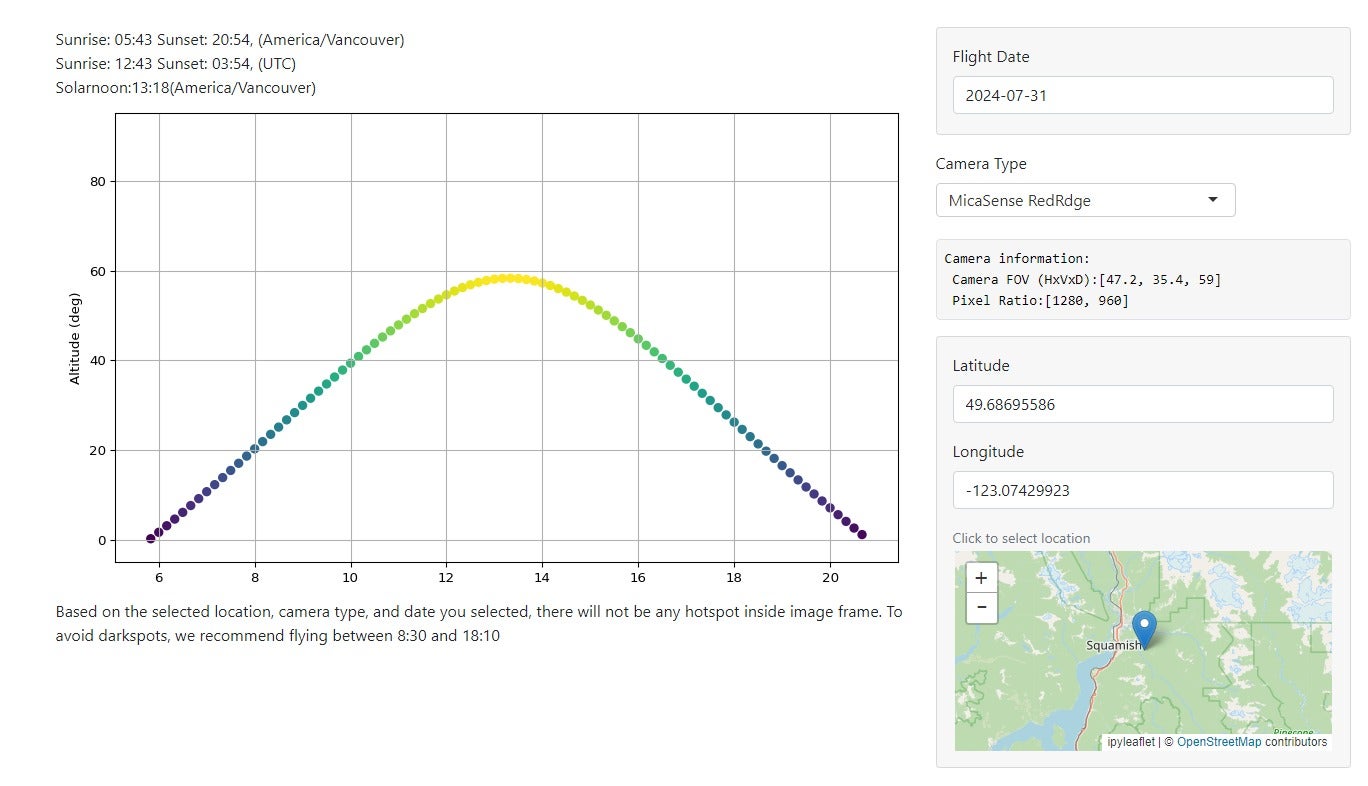

Drone users select the date they plan to fly, the type of camera they are using and their location either by selecting a point on a map or by entering coordinates. The app then indicates the best times of that specific day to collect crop data from a drone.
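The article does not describe When2Fly's internal algorithm. As a rough illustration of how a date, a camera and a location can be turned into times to avoid, here is a minimal sketch under stated assumptions: a camera pointing straight down, a field of view treated as a cone, and a simplified solar-position model (Cooper's declination formula, with no longitude or equation-of-time correction, so results are in local solar time rather than clock time). The function names are hypothetical and are not taken from the app.

```python
import math
from datetime import date


def solar_declination_deg(day: date) -> float:
    """Approximate solar declination in degrees (Cooper's 1969 formula)."""
    n = day.timetuple().tm_yday  # day of the year, 1..366
    return 23.45 * math.sin(math.radians(360.0 * (284 + n) / 365.0))


def hotspot_window(lat_deg: float, day: date, fov_deg: float):
    """Hypothetical helper: return (start, end) in local solar time (hours)
    during which the hotspot may fall inside a nadir image, or None if it
    stays outside the frame all day.

    Assumptions: the camera points straight down and its field of view is
    treated as a cone, so the hotspot (the antisolar point) is in frame
    whenever the solar zenith angle is below half the field of view.
    """
    lat = math.radians(lat_deg)
    decl = math.radians(solar_declination_deg(day))
    half_fov = math.radians(fov_deg / 2.0)

    # Solar zenith angle z at hour angle h:
    #   cos(z) = sin(lat)*sin(decl) + cos(lat)*cos(decl)*cos(h)
    # Solve cos(z) = cos(half_fov) for the boundary hour angle.
    cos_h = (math.cos(half_fov) - math.sin(lat) * math.sin(decl)) / (
        math.cos(lat) * math.cos(decl)
    )
    if cos_h >= 1.0:
        # Even at solar noon the sun is too far from the zenith.
        return None
    cos_h = max(cos_h, -1.0)  # clamp: condition would hold at every hour angle
    half_width_h = math.degrees(math.acos(cos_h)) / 15.0  # Earth turns 15 deg/hour
    return (12.0 - half_width_h, 12.0 + half_width_h)


if __name__ == "__main__":
    # Illustrative inputs: Davis, California, at the June solstice, 60-degree FOV.
    window = hotspot_window(38.54, date(2023, 6, 21), 60.0)
    print(window)
```

For Davis on June 21 with a 60° field of view, this sketch flags roughly 10 a.m. to 2 p.m. local solar time as the window to avoid. A real tool would also need to convert to clock time and account for the camera's rectangular frame and heading.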

Jafarbiglu and Pourreza said that using this app for drone imaging and data collection is crucial for improving farming efficiency and mitigating agriculture's carbon footprint. Receiving the best data, such as which section of an orchard might need more nitrogen or less water, or which trees are being affected by disease, allows producers to allocate resources more efficiently and effectively.

“In conventional crop management, we manage the entire field uniformly assuming every single plant will produce a uniform amount of yield, and they require a uniform amount of input, which is not an accurate assumption,” said Pourreza. “We need to have an insight into our crops’ spatial variability to be able to identify and address issues timely and precisely, and drones are these amazing tools that are accessible to growers, but they need to know how to use them properly.”

Dispelling the solar noon belief

In 2019, Jafarbiglu was working to extract data from aerial images of walnut and almond orchards and other specialty crops when he realized something was wrong with the data.

“No matter how accurately we calibrated all the data, we were still not getting good results,” said Jafarbiglu. “I took this to Alireza, and I said, ‘I feel there’s something extra in the data that we are not aware of and that we’re not compensating for.’ I decided to check it all.”

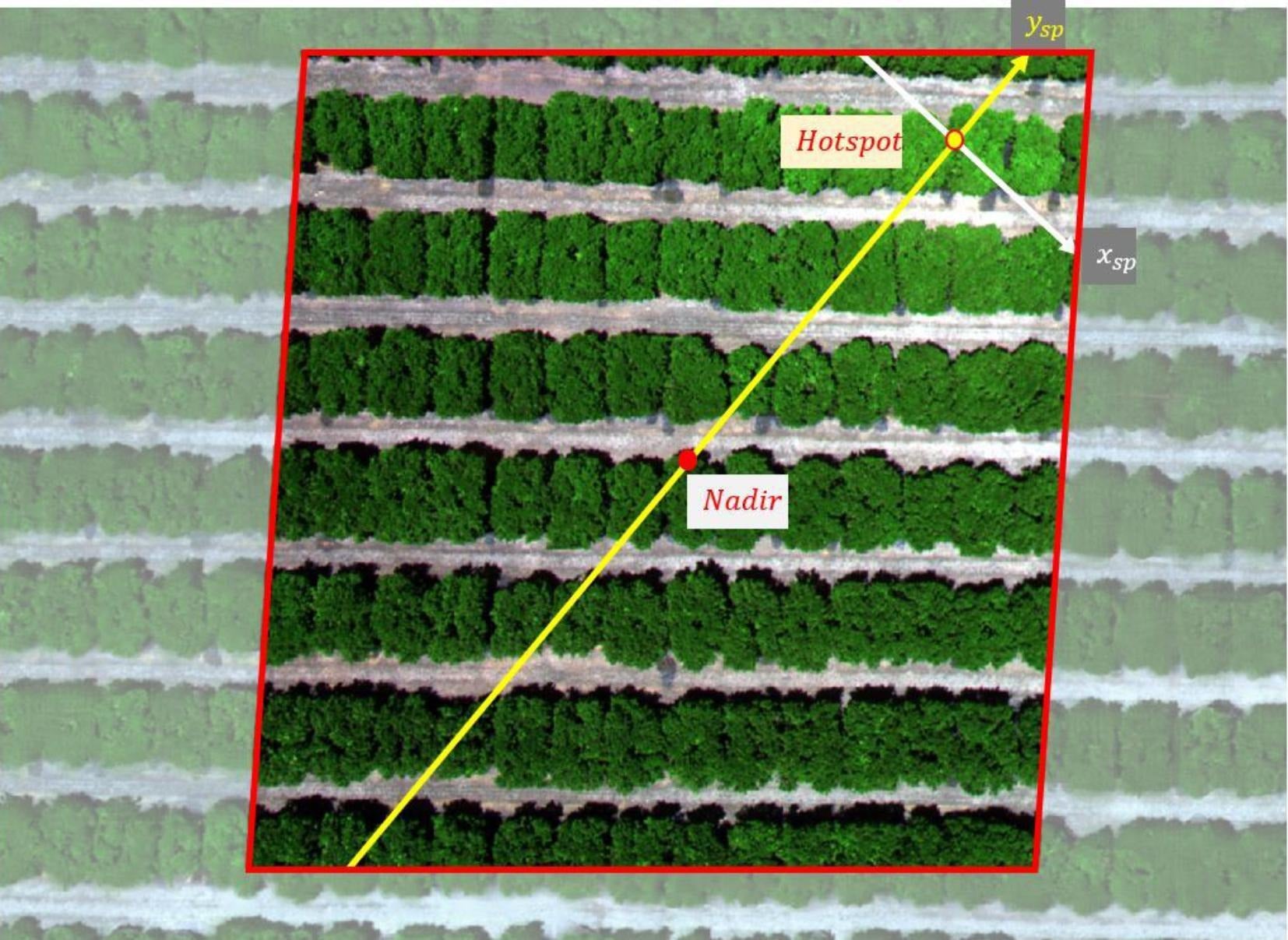

Jafarbiglu pored through the 100 terabytes of images collected over three years. He noticed that after the images had been calibrated, there were glaring bright white spots where they were supposed to look flat and uniform.

But it couldn't be glare, because the sun was behind the drone taking the image. So Jafarbiglu reviewed literature going back to the 1980s in search of other examples of this phenomenon. He found not only mentions of it but also that researchers had coined a term for it: hotspot.

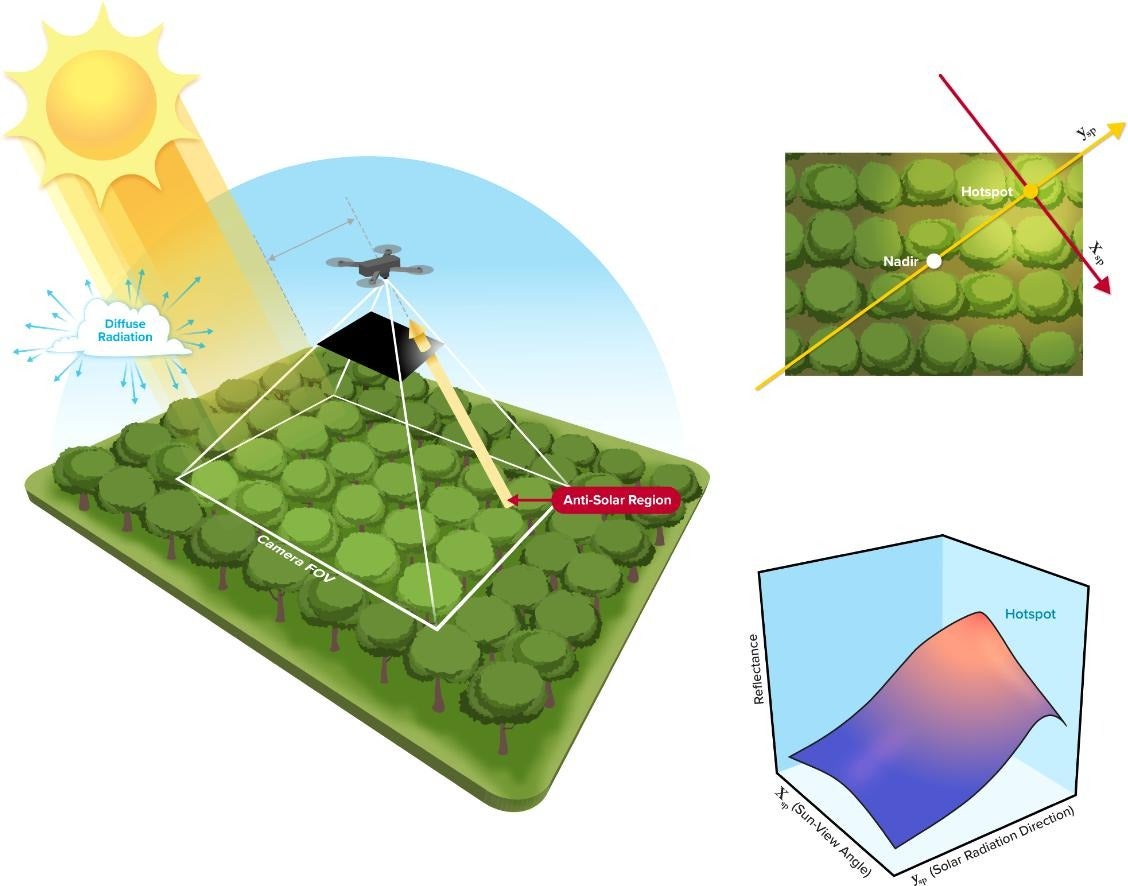

A hotspot occurs when the sun and the UAV line up so that the drone sits directly between the sun and the area its camera is viewing. In the resulting images of the ground, brightness increases gradually toward one area. That bright point is the hotspot.
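For a rough sense of where the hotspot lands in a frame, consider a simplified geometry, offered as an illustration rather than the researchers' published analysis: a camera pointing straight down from altitude $H$ over flat ground, with a field of view treated as a cone of full angle $\alpha$. The hotspot sits at the point directly opposite the sun, which the camera sees at an off-nadir angle equal to the solar zenith angle $\theta_s$. The values $H = 100$ m, $\theta_s = 15^\circ$ and $\alpha = 48^\circ$ below are illustrative assumptions.

$$
\begin{aligned}
d &= H\tan\theta_s = 100\,\text{m}\times\tan 15^\circ \approx 26.8\,\text{m} &&\text{(hotspot offset from image center)}\\
r &= H\tan(\alpha/2) = 100\,\text{m}\times\tan 24^\circ \approx 44.5\,\text{m} &&\text{(frame half-width on the ground)}\\
d < r &\iff \theta_s < \alpha/2 &&\text{(hotspot inside the frame)}
\end{aligned}
$$

Here the hotspot falls about 27 m from the image center on the side away from the sun, well inside the frame. The condition does not depend on altitude, which is why flying higher or lower alone does not remove it.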

The hotspots are a problem, Jafarbiglu said, because when collecting UAV data in agriculture, where a high level of overlap is required, observed differences in the calibrated images need to come solely from plant differences.

For example, every plant may appear in 20 or more images, each taken from a different view angle. In some images the plant might be close to the hotspot, while in others it may be farther away, so its measured reflectance can vary with its distance from the hotspot and its position in the frame rather than with any of the plant's inherent properties. If all these images were combined into a mosaic and data extracted from it, the data would be unreliable and, in effect, useless.

Pourreza and Jafarbiglu found that the hotspots consistently occurred when drones were taking images at solar noon in mid-summer, which many believe is the best time to fly drones. It's an obvious assumption: the sun is at its highest point in the sky, illumination is nearly steady, and fewer shadows are visible in the images. However, that choice can backfire. The sun's position relative to a given field varies with location and time of year, and the higher the sun is in the sky, the more likely the hotspot is to fall inside the image frame.

"In high-latitude regions such as Canada, you don't have any problem; you can fly anytime. But then in low-latitude regions such as California, you will have a little bit of a problem because of the sun angle," Pourreza said. "Then as you get closer to the equator, the problem gets bigger and bigger. For example, the best time of flight in Northern California and Southern California will be different. Then you go to summer in Guatemala, and basically, from 10:30 a.m. to almost 2 p.m. you shouldn't fly, depending on the field of view of the camera. It's exactly the opposite of the conventional belief, that everywhere we should fly at solar noon."
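The latitude effect Pourreza describes can be checked with the standard relation for the sun's zenith angle at solar noon, $\theta_{\text{noon}} = |\varphi - \delta|$, where $\varphi$ is latitude and $\delta$ is the solar declination (about $+23.44^\circ$ at the June solstice). Assuming, for illustration, a straight-down camera whose hotspot is in frame whenever $\theta_{\text{noon}} < \alpha/2$, and a hypothetical field of view of $\alpha = 50^\circ$ (so $\alpha/2 = 25^\circ$), three example locations compare as follows:

$$
\begin{aligned}
\text{Calgary } (\varphi \approx 51.0^\circ\text{N}):&\quad \theta_{\text{noon}} \approx |51.0^\circ - 23.44^\circ| \approx 27.6^\circ > 25^\circ\\
\text{Davis } (\varphi \approx 38.5^\circ\text{N}):&\quad \theta_{\text{noon}} \approx |38.5^\circ - 23.44^\circ| \approx 15.1^\circ < 25^\circ\\
\text{Guatemala City } (\varphi \approx 14.6^\circ\text{N}):&\quad \theta_{\text{noon}} \approx |14.6^\circ - 23.44^\circ| \approx 8.8^\circ < 25^\circ
\end{aligned}
$$

Under these assumptions, the midsummer noon hotspot stays outside the frame in Calgary but falls inside it in Davis and, by a wider margin, in Guatemala City.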

Grow technology, nourish the planet

Drones are not the only tools that can make use of this discovery, which was funded by the AI Institute for Next Generation Food Systems. Troy Magney, an assistant professor of plant sciences at UC Davis, mainly uses towers to scan fields and collect plant reflectance data from various viewing angles. He contacted Jafarbiglu after reading his research, published in February in the ISPRS Journal of Photogrammetry and Remote Sensing, because he was seeing a similar issue in the remote sensing of plants and noted that it’s often ignored by end users.

“The work that Hamid and Ali have done will be beneficial to a wide range of researchers, both at the tower and the drone scale, and help them to interpret what they are actually seeing, whether it’s a change in vegetation or a change in just the angular impact of the signal,” he said.

For Pourreza, the When2Fly app represents a major step forward in deploying technology to solve challenges in agriculture, including the ultimate conundrum: feeding a growing population with limited resources.

“California is much more advanced than other states and other countries with technology, but still our agriculture in the Central Valley uses technologies from 30 to 40 years ago,” said Pourreza. “My research is focused on sensing, but there are other areas like 5G connectivity and cloud computing to automate the data collection and analytics process and make it real-time. All this data can help growers make informed decisions that can lead to an efficient food production system. When2Fly is an important element of that.”