Unconventional Study Explores Whether Chopping Fruit Can Help Computers Learn

When is an apple not an apple? If you’re a computer, the answer is when it’s been cut in half.

While computer vision has advanced significantly over the past few years, teaching a computer to identify objects as they change shape remains a challenge in the field, particularly for artificial intelligence (AI) systems. Now, computer science researchers at the University of Maryland are tackling the problem using objects that we alter every day: fruits and vegetables.

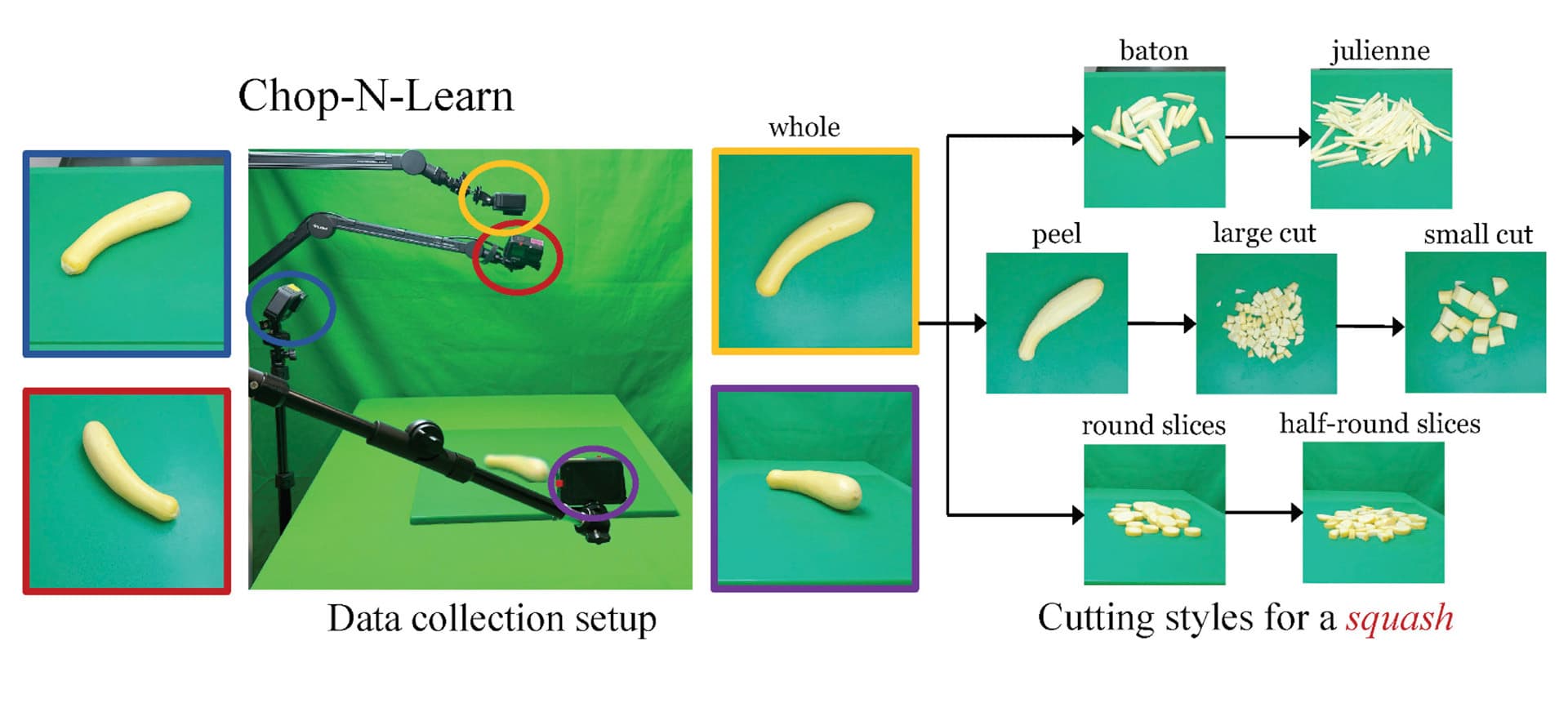

The result is Chop & Learn, a dataset that teaches machine learning systems to recognize produce in various forms, even as it's being peeled, sliced or chopped into pieces.

The project was presented earlier this month at the 2023 International Conference on Computer Vision in Paris.

“You and I can visualize how a sliced apple or orange would look compared to a whole fruit, but machine learning models require lots of data to learn how to interpret that,” said Nirat Saini, a fifth-year computer science doctoral student and lead author of the paper. “We needed to come up with a method to help the computer imagine unseen scenarios the same way that humans do.”

To develop the dataset, Saini and fellow computer science doctoral students Hanyu Wang and Archana Swaminathan filmed themselves chopping 20 types of fruits and vegetables in seven styles, using video cameras set up at four angles.

The variety of angles, people and food-prepping styles is necessary for a comprehensive dataset, said Saini.

“Someone may peel their apple or potato before chopping it, while other people don’t. The computer is going to recognize that differently,” she said.


In addition to Saini, Wang and Swaminathan, the Chop & Learn team includes computer science doctoral students Vinoj Jayasundara and Bo He; Kamal Gupta Ph.D. ’23, now at Tesla Optimus; and their adviser Abhinav Shrivastava, an assistant professor of computer science.

“Being able to recognize objects as they are undergoing different transformations is crucial for building long-term video understanding systems,” said Shrivastava, who also has an appointment in the University of Maryland Institute for Advanced Computer Studies. “We believe our dataset is a good start to making real progress on the basic crux of this problem.”

In the short term, Shrivastava said, the Chop & Learn dataset will contribute to the advancement of image and video tasks such as 3D reconstruction, video generation, and summarization and parsing of long-term video.

Those advances could one day have a broader impact on applications like safety features in driverless vehicles or tools that help officials identify public safety threats, he said.

And while it’s not the immediate goal, Shrivastava said, Chop & Learn could contribute to the development of a robotic chef that could turn produce into healthy meals in your kitchen on command.